The Mysterious Case of Soft 404s on a Shopify Site

While working on a recent project, we ran into an unusual case: dozens of pages incorrectly flagged as soft 404s. Misclassifications like this aren’t uncommon, but this time, something didn’t add up.

After a month of meticulous investigation, we confirmed our suspicions, found a fix, and developed repeatable strategies for diagnosing problems like this one.

If you’re an SEO dealing with unexplainable, recurring soft 404s, here’s how we identified the issue and implemented a fix. Let’s dive into the process step by step, breaking down the problem, our methods, and the solution that got everything back on track.

URL Inspection Failures

Spikes in Soft 404s

Once we noticed the spike in soft 404s, we inspected the affected pages. They appeared functional—products were visible and there were no error messages. Here’s what we did next:

- We checked Google Search Console’s URL Inspection tool. The crawled versions of the pages were marked “not indexed” due to soft 404s, but live tests showed working, indexable pages. Typically, when a page triggers a soft 404, both the crawled version and the live test display errors. This discrepancy was our first clue that something was off.

- It wasn’t just us as users who saw a working page. Google also saw a page with products and content and reported it as indexable, but only in the URL Inspection live test. We requested indexing for these pages, but the outcome didn’t change, even though we saw the new crawls come in shortly afterward. This pointed to a problem with how Google was processing the pages, and we suspected an issue with resource loading.

- By blocking network requests, we discovered that if even one of the chunked JS files failed to load, the entire site would break and no content would render. Instead, an error message was displayed, which was enough to trigger the soft 404. However, we had no proof that Google wasn’t loading the JS files; it was just a theory.

- Finally, after around 100 tests, the GSC URL Inspection Live Test screenshot showed a blank page, with a corresponding soft 404 error callout. In the resources tab, we discovered that the chunked JS files hadn’t loaded due to an “Other Error.”
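When dozens of URLs are affected, checking each one in the GSC interface gets slow. Search Console also offers a URL Inspection API. As far as we know, it reports on the indexed (crawled) version of a URL and does not run a live test, and the indexed version is the one that was failing here.

The minimal sketch below assumes you've already fetched API responses for your URLs. Authentication and the HTTP request are left out. The code simply lists the URLs whose indexed result is a soft 404. The field names (`inspectionResult`, `indexStatusResult`, `pageFetchState`, `lastCrawlTime`) and the `SOFT_404` value reflect our reading of Google's API reference, so verify them against the current docs. The sample data is hypothetical.

```python
from typing import Any


def soft_404_urls(responses: dict[str, dict[str, Any]]) -> list[tuple[str, str]]:
    """Return (url, lastCrawlTime) pairs for URLs whose indexed result is a soft 404.

    `responses` maps each inspected URL to the parsed JSON body that the
    URL Inspection API returned for it (assumed response shape).
    """
    flagged = []
    for url, body in responses.items():
        status = body.get("inspectionResult", {}).get("indexStatusResult", {})
        if status.get("pageFetchState") == "SOFT_404":
            flagged.append((url, status.get("lastCrawlTime", "unknown")))
    return sorted(flagged)


# Hypothetical, trimmed-down responses for two product URLs.
sample = {
    "https://example.com/products/board-a": {
        "inspectionResult": {
            "indexStatusResult": {
                "pageFetchState": "SOFT_404",
                "lastCrawlTime": "2024-05-01T10:00:00Z",
            }
        }
    },
    "https://example.com/products/board-b": {
        "inspectionResult": {
            "indexStatusResult": {
                "pageFetchState": "SUCCESSFUL",
                "lastCrawlTime": "2024-05-02T10:00:00Z",
            }
        }
    },
}

for url, crawled in soft_404_urls(sample):
    print(f"{url}: soft 404 (last crawled {crawled})")
```

Re-running this after a fix, once Google has recrawled the pages, is a quick way to see whether the flagged list is shrinking.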

What Does “Other Error” Mean?

Unsurprisingly, Google offers little documentation on what “Other Error” means in URL Inspection. In practice, though, it indicates that Google declined to load a file. Based on our experience and input from Barry Hunter, these errors often relate to crawl budget issues.

In our case, a spider trap had created thousands of URLs that returned a 200 status code but were useless to users; only Google was crawling them. The soft 404 issue appeared shortly afterward. The timing was suspicious: an influx of URLs like this could strain crawl budget and lead to “Other Error” results on other pages.
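One way to surface a spider trap like this is to group the URLs Googlebot requests (for example, from server logs or a crawl export) by path. You then count how many distinct query-string variants each path has.

The sketch below is a hypothetical illustration, not the exact process from this investigation. Three things in it are assumptions:

- the sample URLs
- the `min_variants` threshold
- the idea that a high variant count signals a trap

```python
from collections import defaultdict
from urllib.parse import urlsplit


def suspected_traps(urls: list[str], min_variants: int = 3) -> list[tuple[str, int]]:
    """Group URLs by path and flag paths with many distinct query-string variants.

    Returns (path, variant_count) pairs, largest first. `min_variants` is an
    arbitrary threshold; tune it to your site's normal URL patterns.
    """
    variants: dict[str, set[str]] = defaultdict(set)
    for url in urls:
        parts = urlsplit(url)
        variants[parts.path].add(parts.query)
    flagged = [(path, len(qs)) for path, qs in variants.items() if len(qs) >= min_variants]
    # Sort by variant count (descending), then path, for stable output.
    return sorted(flagged, key=lambda item: (-item[1], item[0]))


# Hypothetical Googlebot requests pulled from server logs.
googlebot_urls = [
    "https://example.com/products/board-a",
    "https://example.com/collections/boards?filter=blue",
    "https://example.com/collections/boards?filter=blue&filter=red",
    "https://example.com/collections/boards?filter=blue&filter=red&filter=blue",
    "https://example.com/collections/boards?filter=red",
    "https://example.com/pages/about",
]

for path, count in suspected_traps(googlebot_urls):
    print(f"{path}: {count} query variants")
```

A path that keeps generating new parameter combinations, especially ones that repeat values as in the third and fourth sample URLs, is worth a closer look.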

A note on third-party domains and crawl budget

Google describes crawl budget as something it allocates per site. Since these files load from Shopify’s CDN, you might assume you can’t do anything about it, and that any problem would be widespread across many Shopify sites.

We confirmed there were no such issues on the other Shopify sites we had GSC access to, so this didn’t seem to be a Shopify issue. It was a Google-doesn’t-want-to-load-the-file issue. We tend to find that the more resources a page loads (whether first party or third party), the more likely it is that one or more “Other Errors” will occur. Those errors can prevent Google from fully loading, crawling, and correctly indexing your site’s pages.

Although it’s tough to draw a definite conclusion, we suspect that Google factors in the client site’s crawl budget and the number of resources needed to load its pages. The third-party host (Shopify’s CDN in this case) doesn’t necessarily have an impact. More testing is needed to confirm this.

Chunked JS Files: Another Straw on the Camel's Back

The spider trap led us to believe crawl budget was being affected, so we looked at the chunked JS files next. We knew from previous experience that Google doesn’t always load every file. We suspected that the time and resources spent on the new spider trap URLs might explain why Google wasn’t loading all page resources.

Chunked JS files (JavaScript split into smaller files, often called code splitting) are commonly used to improve site performance, as recommended by Google. However, they carry a critical risk when it comes to Googlebot: if even one chunked file fails to load, it can prevent the site from rendering critical content. For search engines, this might mean seeing an error page instead of your actual content, leading to issues like soft 404s.

In our case, testing revealed that blocking a single chunked JS file caused pages to display nothing but an error message. When Googlebot declined to load some of these files (flagging them with “Other Error”), it was left with that error page, so the pages were treated as soft 404s. Some sites handle missing chunks more gracefully. Our client’s site, however, relied on every file loading perfectly, which created a higher risk of failure. (OHGM wrote an insightful piece about this a few years ago.)

Note: We’ve tested other sites and seen cases where even multiple blocked chunked JS files didn’t break the site to the degree we saw here. You may not have the same critical failure point, which is why you should always test.

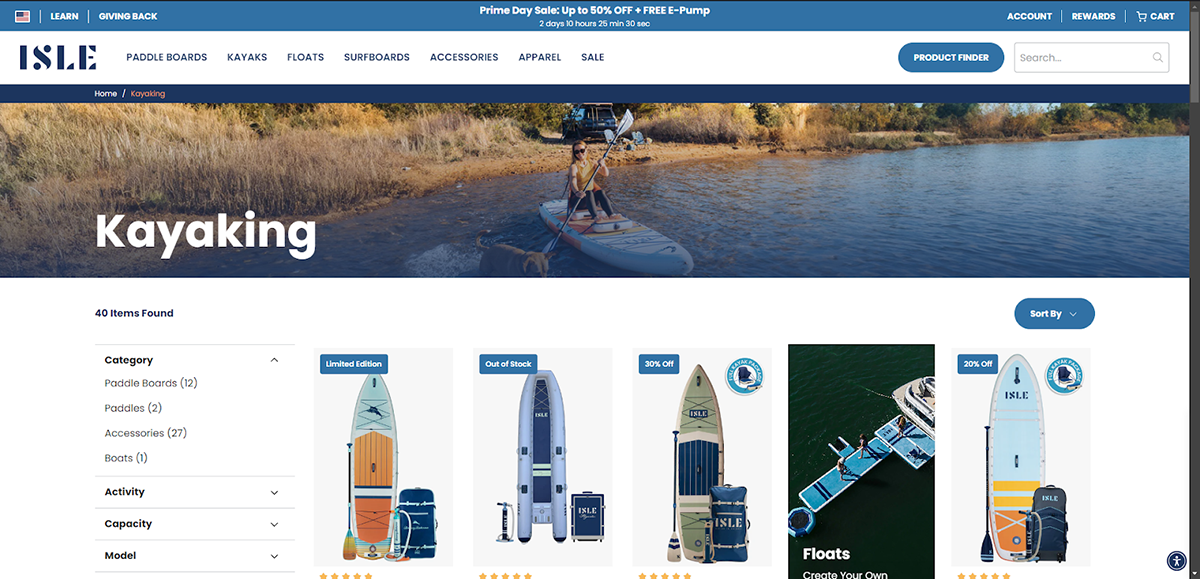

We were able to test this by network blocking the individual JS files and watching the results.

→ If one of the chunked files did not load, the entire site would only display an error message.

→ When we finally saw Googlebot report “Other Error” as the reason the chunked JS files hadn’t loaded, we knew it had seen only an error page. From its perspective, it would accurately conclude this was a soft 404.

Our Resolution

Due to timing and other constraints, we couldn’t implement prerendering. Instead, we:

- Blocked spider trap URLs in robots.txt to prevent Googlebot from wasting crawl budget.

- Collaborated with the Dev team to implement fixes on specific types of pages that we suspected were causing issues.
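Before deploying a robots.txt change like this, it’s worth checking two things: that the new rules block the trap URLs, and that they don’t also block real pages or the JavaScript files Googlebot needs to render them.

Below is a minimal sketch using Python’s standard-library `urllib.robotparser`. The `/collections/boards/filter/` trap path and the sample URLs are hypothetical stand-ins, not the client’s actual rules. This parser is not a full implementation of Google’s matching rules (wildcard patterns in particular), so treat it as a sanity check rather than a guarantee of how Googlebot will interpret the file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules: block only the spider trap path.
ROBOTS_TXT = """\
User-agent: *
Disallow: /collections/boards/filter/
"""


def check_rules(robots_txt: str, urls: list[str], agent: str = "Googlebot") -> dict[str, bool]:
    """Return {url: allowed?} for each URL under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse() also marks the rules as loaded
    return {url: parser.can_fetch(agent, url) for url in urls}


results = check_rules(
    ROBOTS_TXT,
    [
        "https://example.com/collections/boards/filter/blue/red/blue",  # trap URL
        "https://example.com/collections/boards",  # real collection page
        "https://example.com/assets/chunk-3f9a2c.js",  # JS the page needs to render
    ],
)
for url, allowed in results.items():
    print("allowed" if allowed else "BLOCKED", url)
```

On Shopify, custom robots.txt rules are added through the `robots.txt.liquid` theme template.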

Ultimately, Googlebot resumed loading the chunked JS files. While it’s hard to pinpoint the exact fix, the robots.txt update likely played a key role by freeing up crawl budget.

This case highlights the complexities of diagnosing and fixing soft 404 errors. By methodically testing assumptions and addressing crawl budget inefficiencies, we were able to resolve the issue and restore proper indexing. If you’re facing similar challenges, start with a detailed inspection and tackle one variable at a time. You might be surprised at what makes the difference.

How can you fix recurring Soft 404s?

If you’re dealing with soft 404 errors from files not loading, there are several solutions worth exploring. This isn’t about pages discussing error codes or containing language that could be mistaken for soft 404s—it’s about mitigating the risk of Googlebot misinterpreting your pages due to missing content.

Before we jump into the options, note that while Googlebot can render JavaScript, we’ve seen enough cases where it renders a page only partially, so don’t assume your content is always being fully processed. Rather than relying on Google, we highly recommend one of the following options. Each reduces the risk of losing traffic and rankings when Googlebot fails to fully render your JavaScript.

Server-side rendering (SSR) is ideal if you frequently update your products or content. With SSR, the server does the heavy lifting and returns a complete HTML page for each request. Bots get the full page content in that response, so you don’t have to worry about JavaScript files failing to load and hiding it.

Prerendering creates HTML files in advance, rather than dynamically generating them upon request like SSR. Prerendering allows bots to see the full page without depending on JavaScript to load critical elements. It’s a solid choice if your content remains relatively static throughout the day.

Hydration is a best-of-both-worlds approach. The server sends fully rendered HTML, and JavaScript then “hydrates” it, adding interactive functionality and potentially additional content. As long as the initial HTML includes all critical information (like products or key content), hydration is an effective approach. Bonus: it’s also very popular with developers.

Compared to hydration, partial hydration shifts more of the load to JavaScript, which adds most of the content and interactive functionality after the initial HTML loads. While it’s better than full client-side rendering, it’s not as foolproof as the previous options. As long as you can get some critical content into the initial HTML, such as a row of products and a bit of text, you’ll likely be fine. Personally, though, I prefer the three options above.
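Whichever of these approaches you choose, a quick check is to look at the raw HTML the server returns, before any JavaScript runs, and confirm the critical content is already there.

The sketch below uses only Python’s standard library. The function names, URL, and product strings are hypothetical placeholders. It inspects the unrendered response only, so it tells you what a bot would get if every script failed, not what Googlebot actually renders.

```python
from html.parser import HTMLParser
from urllib.request import Request, urlopen


class VisibleText(HTMLParser):
    """Collects text from an HTML document, skipping <script> and <style> contents."""

    def __init__(self) -> None:
        super().__init__()
        self.parts: list[str] = []
        self._skip = 0  # depth inside <script>/<style>

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            self.parts.append(data)


def missing_content(html: str, required: list[str]) -> list[str]:
    """Return the required strings that are NOT in the page's visible text."""
    parser = VisibleText()
    parser.feed(html)
    parser.close()  # flush any buffered trailing text
    text = " ".join(parser.parts)
    return [item for item in required if item not in text]


def fetch_raw_html(url: str) -> str:
    """Fetch the server's HTML response as-is; no JavaScript is executed."""
    request = Request(url, headers={"User-Agent": "raw-html-check"})
    with urlopen(request, timeout=30) as response:
        return response.read().decode("utf-8", errors="replace")
```

For example, suppose `missing_content(fetch_raw_html("https://example.com/collections/boards"), ["Board A", "Board B"])` returns an empty list. That means those (hypothetical) product names are already in the initial HTML. Text that only exists inside `<script>` tags, such as embedded JSON, is deliberately ignored, since it isn’t displayed without JavaScript.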

If none of the above methods are available to you, consider reducing the overall number of resources and placing your critical JavaScript into a single file hosted on your domain. Fewer files mean fewer potential failure points. However, this is more of a stopgap solution and problems may still arise. It’s better to prioritize SSR, prerendering, or hydration when possible.
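To put rough numbers on “fewer files mean fewer potential failure points,” here’s a back-of-the-envelope model. Purely for illustration, it assumes two things:

- each critical file fails to load independently, with the same probability `p`
- the page breaks if any one of those files fails

Under those assumptions, the chance a page with `n` critical files renders is (1 − p)^n. Googlebot’s real behavior is not this simple, and the 2% rate below is hypothetical, not something we measured.

```python
def render_success_probability(n_files: int, p_fail: float) -> float:
    """Chance that all n critical files load, if each fails independently with p_fail."""
    if n_files < 0 or not 0.0 <= p_fail <= 1.0:
        raise ValueError("n_files must be >= 0 and p_fail must be in [0, 1]")
    return (1.0 - p_fail) ** n_files


# Hypothetical 2% per-file failure rate, for illustration only.
for n in (1, 5, 20):
    chance = render_success_probability(n, 0.02)
    print(f"{n:>2} critical files: {chance:.1%} chance the page renders")
```

With these made-up numbers, going from 1 to 20 critical files drops the chance of a clean render from 98% to roughly 67%. That gap is why consolidating critical code helps even though it doesn’t remove the risk.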

Keep in mind that consolidating JavaScript into fewer files also reduces network requests, and Google balances request volume so that it doesn’t overwhelm servers when crawling. You can also use this approach to prioritize critical JavaScript. Load the code responsible for content, links, and page layout first, and the less important code for user interaction last. That way, if Google does return an “Other Error,” the impact may be smaller.

In some cases, reverting to a single JavaScript file might be necessary. While this sacrifices performance, it could prevent soft 404s from wreaking havoc on your traffic. Use this only as a last-ditch effort if other options aren’t feasible.

What tools should you use to analyze a Soft 404 issue?

To pinpoint the issue, you’ll need Google Search Console (GSC) and your browser’s DevTools Network tab. Here’s how to use them:

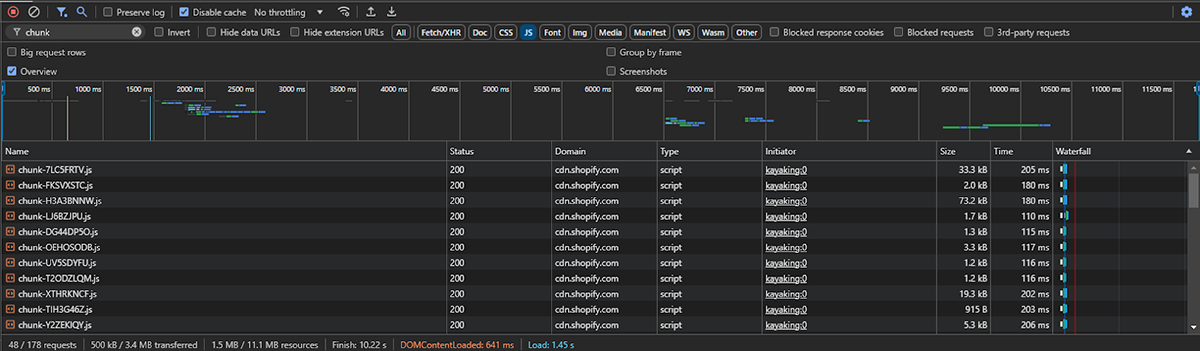

DevTools

- Use the Network tab to review JavaScript files, looking specifically for chunked JavaScript files

- These files will often have ‘chunk’ in their names, especially on a Shopify site

- They may repeat a file name with an added ID string, e.g., main-112541d.js and main-sag123.js

- Use the “Block Request URL” feature to block individual scripts from loading in order to see how they impact the page load. If critical content doesn’t load, this could trigger a soft 404.
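If a page loads dozens of scripts, you can export the Network tab as a HAR file from Chrome DevTools and list the likely chunk files before blocking them one at a time.

The sketch below reads the HAR file’s `log.entries`, keeps JavaScript responses, and flags filenames that either contain “chunk” or end in a hash-like suffix, such as main-112541d.js and main-sag123.js. These filename patterns are heuristics we’re assuming for illustration; your build tool may name chunks differently.

```python
import json
import re
from urllib.parse import urlsplit

# Heuristic (assumption): a "-" or "." followed by 6+ letters/digits that
# include at least one digit, right before ".js" (e.g. main-112541d.js).
HASHED_NAME = re.compile(r"[-.](?=[0-9a-zA-Z]*\d)[0-9a-zA-Z]{6,}\.js$")


def is_js(entry: dict) -> bool:
    """True if a HAR entry looks like a JavaScript response."""
    mime = entry.get("response", {}).get("content", {}).get("mimeType", "")
    path = urlsplit(entry["request"]["url"]).path
    return "javascript" in mime or path.endswith(".js")


def chunk_candidates(har: dict) -> list[str]:
    """Return script URLs from a HAR export whose filenames look like chunks."""
    found = []
    for entry in har.get("log", {}).get("entries", []):
        if not is_js(entry):
            continue
        url = entry["request"]["url"]
        filename = urlsplit(url).path.rsplit("/", 1)[-1]  # ignores ?v= query strings
        if "chunk" in filename.lower() or HASHED_NAME.search(filename):
            found.append(url)
    return found


def load_har(path: str) -> dict:
    """Load a HAR file exported from the DevTools Network tab."""
    with open(path, encoding="utf-8") as fh:
        return json.load(fh)
```

Each URL returned is only a candidate. Blocking it in DevTools and watching what the page renders is still what tells you whether it’s critical.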

URL Inspection in GSC

- Perform a live test and review the Resources tab for flagged JS files labeled as “Other Error.”

- Note that GSC’s live test often loads more resources than Googlebot typically does during regular crawls. If the live test succeeds but soft 404 errors persist, JS files failing to load during regular crawls are a likely cause.

A note on the differences between Googlebot and the URL Inspection live test

One of the difficult things about diagnosing this issue was that the URL Inspection live test showed the page working every time except for that one instance where it didn’t.

It consistently loaded the resources, and when it did return “Other Error” for files, they were primarily images. We’ve typically found that the live test loads more resources than Googlebot does for the indexed version of the page. I do generally trust what the URL Inspection Tool provides, and it’s great for diagnosing issues. However, this discrepancy in resource loading makes it a little less reliable when you’re diagnosing “Other Error” and soft 404 problems.

Example Walkthrough: Analyzing ISLE Surf & SUP

Let’s use the ISLE Surf & SUP website as an example. While we have no affiliation with them, their site demonstrates chunked JavaScript files in action.

Here’s what the site shows when we block one of its chunked JS files:

The product list disappears, displaying “0 items found.” This is enough to trigger a soft 404. If Googlebot fails to load one of these chunked JS files, this is what Googlebot will see.

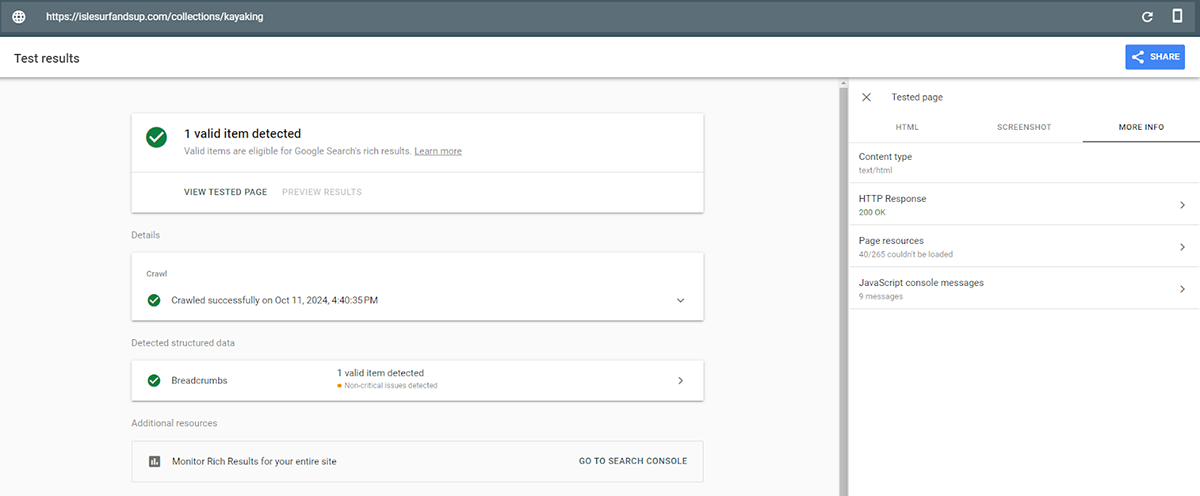

We’ll now use the Rich Results Test’s URL Inspection data. (We’re only using it because we don’t have GSC access for this site.) Running ISLE through this test shows that 40 of its 265 resources did not load.

For reference, you’d click on View Tested Page -> More Info -> Page resources in order to view the resources.

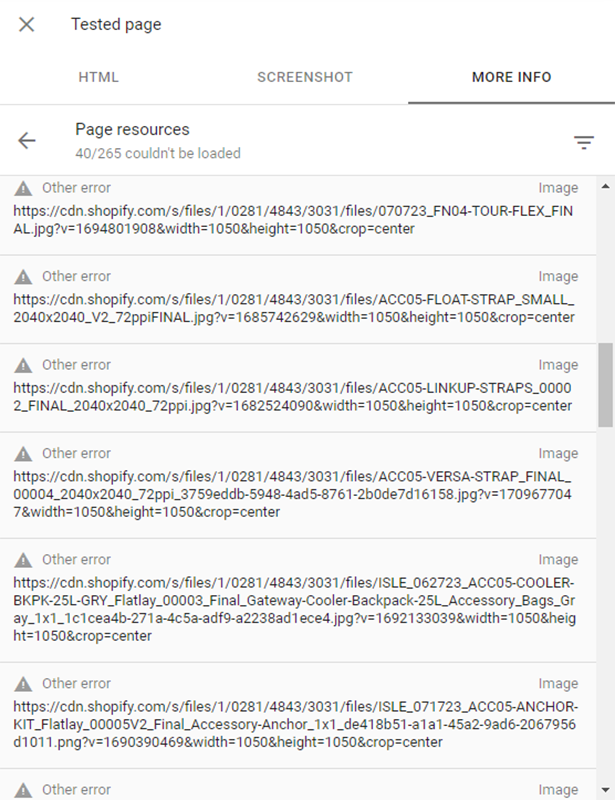

You can already see some images marked “Other Error” (this is the window that pops out when you click Page resources).

If you see “Other Error” for any JavaScript files here, check them by blocking them in DevTools. Other critical JavaScript files may fail to load as well, so check all of your JavaScript files to be safe. If you have a lot of resources, consider copying and pasting the list into something like Notepad++ or Excel to clean it up and quickly narrow it down to the scripts.
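If you’d rather script that cleanup than do it by hand, here’s a minimal sketch. We’re assuming each pasted line holds the resource URL, its type, and its status, separated by tabs; that layout is hypothetical, so adjust the split to match what your copy-paste actually produces. The function keeps only scripts whose status mentions “Other error.”

```python
def failed_scripts(pasted: str) -> list[str]:
    """Return script URLs whose status mentions 'Other error'.

    Assumes each line is "URL<TAB>type<TAB>status" (hypothetical layout).
    """
    failed = []
    for line in pasted.splitlines():
        fields = [field.strip() for field in line.split("\t")]
        if len(fields) < 3:
            continue  # skip headers, blank lines, or rows in another layout
        url, res_type, status = fields[0], fields[1], fields[2]
        is_script = res_type.lower() == "script" or url.split("?", 1)[0].endswith(".js")
        if is_script and "other error" in status.lower():
            failed.append(url)
    return failed
```

Each URL it returns is a file to block in DevTools next, to see whether the page still renders its critical content without it.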

Keep in mind that the URL Inspection Test may well show these files loading, because it tends to load more resources than the regular crawler does. If it does, but you’re still seeing soft 404 errors in Google Search Console, this is likely your culprit.

To see an example of a site that handles this well, visit Chubbies Shorts: you can block every chunked JS file and the site still works. It looks like they use hydration, since some functionality is lost while the content remains. It’s a great example to show a developer if you’re pushing to fix this issue preemptively.

Conclusion

Soft 404 errors can devastate your site’s traffic and rankings. While the ultimate goal is to use prerendering, server-side rendering, or hydration, analyzing and resolving resource-loading issues is an essential first step to determining the cause of the problem. By ensuring Googlebot can access your critical content, you’ll protect your site from preventable errors and keep your pages performing at their best.